Upload folder using huggingface_hub

- .gitattributes +1 -0
- .gitignore +207 -0
- README.md +19 -3
- demo.py +241 -0
- example.webp +0 -0
- face_shape_model.pkl +3 -0
- label_encoder.pkl +3 -0
- requirements.txt +7 -0
- sample_image.jpg +3 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+sample_image.jpg filter=lfs diff=lfs merge=lfs -text

.gitignore

ADDED

@@ -0,0 +1,207 @@

+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[codz]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py.cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# UV
+# Similar to Pipfile.lock, it is generally recommended to include uv.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+#uv.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+#poetry.toml
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+# pdm recommends including project-wide configuration in pdm.toml, but excluding .pdm-python.
+# https://pdm-project.org/en/latest/usage/project/#working-with-version-control
+#pdm.lock
+#pdm.toml
+.pdm-python
+.pdm-build/
+
+# pixi
+# Similar to Pipfile.lock, it is generally recommended to include pixi.lock in version control.
+#pixi.lock
+# Pixi creates a virtual environment in the .pixi directory, just like venv module creates one
+# in the .venv directory. It is recommended not to include this directory in version control.
+.pixi
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.envrc
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+# PyCharm
+# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
+# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
+# and can be added to the global gitignore or merged into this file. For a more nuclear
+# option (not recommended) you can uncomment the following to ignore the entire idea folder.
+#.idea/
+
+# Abstra
+# Abstra is an AI-powered process automation framework.
+# Ignore directories containing user credentials, local state, and settings.
+# Learn more at https://abstra.io/docs
+.abstra/
+
+# Visual Studio Code
+# Visual Studio Code specific template is maintained in a separate VisualStudioCode.gitignore
+# that can be found at https://github.com/github/gitignore/blob/main/Global/VisualStudioCode.gitignore
+# and can be added to the global gitignore or merged into this file. However, if you prefer,
+# you could uncomment the following to ignore the entire vscode folder
+# .vscode/
+
+# Ruff stuff:
+.ruff_cache/
+
+# PyPI configuration file
+.pypirc
+
+# Cursor
+# Cursor is an AI-powered code editor. `.cursorignore` specifies files/directories to
+# exclude from AI features like autocomplete and code analysis. Recommended for sensitive data
+# refer to https://docs.cursor.com/context/ignore-files
+.cursorignore
+.cursorindexingignore
+
+# Marimo
+marimo/_static/
+marimo/_lsp/
+__marimo__/

README.md

CHANGED

|

@@ -1,3 +1,19 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Face Shape AI

|

| 2 |

+

|

| 3 |

+

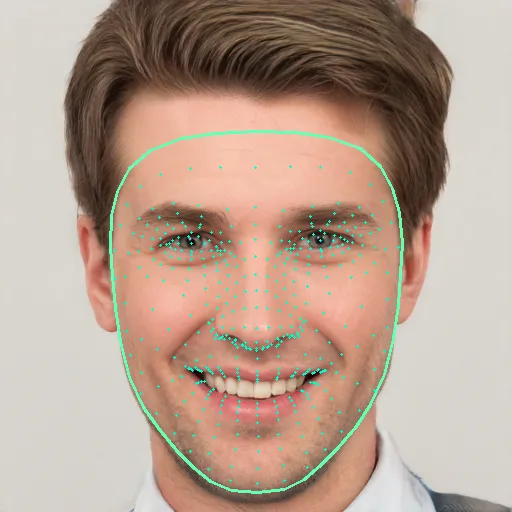

FaceShapeAI predicts your **face shape** from a photo.

|

| 4 |

+

|

| 5 |

+

### Try the app demo

|

| 6 |

+

|

| 7 |

+

- [Face Shape Detector](https://attractivenesstest.com/face_shape)

|

| 8 |

+

- [HF Space](https://huggingface.co/spaces/Rob4343/AIFaceShapeDetector).

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

### Run locally

|

| 13 |

+

|

| 14 |

+

- `python demo.py your_photo.jpg`

|

| 15 |

+

|

| 16 |

+

FaceShapeAI analyzes your photo to detect a face and extract a dense set of facial landmarks (eyes, nose, mouth, jawline, and more). It then lightly normalizes the geometry—correcting small head tilt and scaling the landmarks for consistency across images. Finally, it runs the trained model included in this repo to predict the most likely face shape and prints confidence scores for each class.

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

|
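The normalization step described in the README (tilt correction and scaling) can be illustrated with a small two-dimensional sketch. This is not the repository's code: `normalize_landmarks` in `demo.py` is the actual implementation and works on NumPy arrays with a z coordinate. The function name `normalize_points`, the eye ordering, and the toy coordinates below are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def normalize_points(points: List[Point], eye_a: Point, eye_b: Point) -> List[Point]:
    """Center on the eye midpoint, level the eye line, scale eye distance to 1."""
    # Midpoint between the two eyes becomes the new origin.
    cx = (eye_a[0] + eye_b[0]) / 2.0
    cy = (eye_a[1] + eye_b[1]) / 2.0
    # Vector from eye_a to eye_b gives the tilt angle and the scale.
    dx = eye_b[0] - eye_a[0]
    dy = eye_b[1] - eye_a[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        raise ValueError("eye points must be distinct")
    angle = math.atan2(dy, dx)
    # Rotating by -angle puts the eye line on the x-axis.
    c, s = math.cos(-angle), math.sin(-angle)
    out: List[Point] = []
    for x, y in points:
        x, y = x - cx, y - cy
        out.append(((c * x - s * y) / dist, (s * x + c * y) / dist))
    return out


# Toy face tilted by 45 degrees: two "eyes" and a "chin" (hypothetical values).
eyes_and_chin = [(100.0, 100.0), (200.0, 200.0), (100.0, 200.0)]
normalized = normalize_points(eyes_and_chin, eyes_and_chin[0], eyes_and_chin[1])
for point in normalized:
    print(point)
```

After this transform the two eye points sit half a unit to either side of the origin on the x-axis, so faces photographed at different sizes and small tilts become comparable.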

demo.py

ADDED

@@ -0,0 +1,241 @@

+from __future__ import annotations
+
+from pathlib import Path
+from typing import Any, Dict, List, Optional, Sequence, Tuple, Union
+
+import cv2
+import mediapipe as mp
+import numpy as np
+import pickle
+import argparse
+
+# Paths to model files (assumed to be in the same directory as this script)
+PROJECT_DIR = Path(__file__).parent
+MODEL_FILE = PROJECT_DIR / 'face_shape_model.pkl'
+LABEL_ENCODER_FILE = PROJECT_DIR / 'label_encoder.pkl'
+
+Keypoint = Dict[str, float]
+NormalizedLandmark = Tuple[float, float, float]
+
+

+def normalize_landmarks(keypoints: Sequence[Keypoint], width: int, height: int) -> List[NormalizedLandmark]:
+    """
+    Normalize keypoints to be centered, roll-corrected, and scaled.
+    Retains 3D coordinates (Z) but aligns to the 2D plane based on eyes.
+
+    Returns list of tuples: [(x, y, z), ...]
+
+    (Copied from create.py to ensure consistent preprocessing)
+    """
+    if not keypoints:
+        return []
+
+    # Convert to numpy array (N, 3)
+    landmarks = np.array([[kp["x"], kp["y"], kp["z"]] for kp in keypoints])
+
+    # Denormalize x, y, z to pixel/aspect-correct coordinates
+    # MediaPipe Z is roughly same scale as X (relative to image width)
+    landmarks[:, 0] *= width
+    landmarks[:, 1] *= height
+    landmarks[:, 2] *= width
+
+    # Indices for irises (refine_landmarks=True gives 478 points)
+    # 468: Left Iris Center (Subject's Left, Image Right)
+    # 473: Right Iris Center (Subject's Right, Image Left)
+    left_iris_idx = 468
+    right_iris_idx = 473
+
+    if len(landmarks) > right_iris_idx:
+        left_iris = landmarks[left_iris_idx]
+        right_iris = landmarks[right_iris_idx]
+    else:
+        # Fallback to eye corners if iris landmarks missing
+        p1 = landmarks[33]  # Left eye outer
+        p2 = landmarks[133]  # Left eye inner
+        left_iris = (p1 + p2) / 2
+        p3 = landmarks[362]  # Right eye inner
+        p4 = landmarks[263]  # Right eye outer
+        right_iris = (p3 + p4) / 2
+
+    # 1. Centering: Move midpoint of eyes to origin
+    eye_center = (left_iris + right_iris) / 2.0
+    landmarks -= eye_center
+
+    # 2. Rotation (Roll Correction)
+    delta = left_iris - right_iris
+    dX, dY = delta[0], delta[1]
+
+    # Calculate angle of this vector relative to horizontal
+    angle = np.arctan2(dY, dX)
+
+    # Rotate by -angle to align with X-axis
+    c, s = np.cos(-angle), np.sin(-angle)
+
+    # Rotation matrix around Z axis
+    R = np.array([
+        [c, -s, 0],
+        [s, c, 0],
+        [0, 0, 1]
+    ])
+
+    landmarks = landmarks.dot(R.T)
+
+    # 3. Scaling: Scale such that inter-ocular distance is 1.0
+    dist = np.sqrt(dX**2 + dY**2)
+    if dist > 0:
+        scale = 1.0 / dist
+        landmarks *= scale
+
+    # Convert to list of tuples
+    return [(round(float(l[0]), 5), round(float(l[1]), 5), round(float(l[2]), 5))
+            for l in landmarks]
+
+

+def create_face_mesh(image_path: Union[str, Path]) -> Tuple[Optional[List[Keypoint]], Optional[np.ndarray]]:
+    """
+    Process image to get face mesh data using MediaPipe
+    Returns: keypoints, img_bgr or None if failed
+
+    (Copied from create.py to ensure consistent preprocessing)
+    """
+    max_width_or_height = 512
+
+    mp_face_mesh = mp.solutions.face_mesh
+
+    # Initialize face mesh
+    with mp_face_mesh.FaceMesh(
+        static_image_mode=True,
+        max_num_faces=1,
+        refine_landmarks=True,
+        min_detection_confidence=0.5) as face_mesh:
+
+        # Read image from file
+        img_bgr = cv2.imread(str(image_path))
+
+        if img_bgr is None:
+            print(f"Error: Could not read image: {image_path}")
+            return None, None
+
+        # Downscale large images to speed up inference (keep aspect ratio)
+        h, w = img_bgr.shape[:2]
+        longest = max(h, w)
+        if longest > max_width_or_height:
+            scale = max_width_or_height / float(longest)
+            new_w = max(1, int(round(w * scale)))
+            new_h = max(1, int(round(h * scale)))
+            img_bgr = cv2.resize(img_bgr, (new_w, new_h), interpolation=cv2.INTER_AREA)
+
+        # Convert BGR to RGB for MediaPipe processing
+        img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)
+
+        # Process the image
+        results = face_mesh.process(img_rgb)
+
+        if not results.multi_face_landmarks:
+            print(f"Error: No face detected in: {image_path}")
+            return None, None
+
+        keypoints = []
+        for landmark in results.multi_face_landmarks[0].landmark:
+            keypoints.append({
+                "x": round(landmark.x, 5),
+                "y": round(landmark.y, 5),
+                "z": round(landmark.z, 5)
+            })
+        return keypoints, img_bgr
+
+

+def load_model_resources() -> Tuple[Any, Any]:
+    """Load the trained model and label encoder."""
+    if not MODEL_FILE.exists():
+        raise FileNotFoundError(f"Model file not found at {MODEL_FILE}. Please run create_model.py first.")
+
+    if not LABEL_ENCODER_FILE.exists():
+        raise FileNotFoundError(f"Label encoder file not found at {LABEL_ENCODER_FILE}. Please run create_model.py first.")
+
+    print(f"Loading model from {MODEL_FILE}...")
+    with open(MODEL_FILE, 'rb') as f:
+        model = pickle.load(f)
+
+    print(f"Loading label encoder from {LABEL_ENCODER_FILE}...")
+    with open(LABEL_ENCODER_FILE, 'rb') as f:
+        label_encoder = pickle.load(f)
+
+    return model, label_encoder
+
+

+def predict_face_shape(image_path: Union[str, Path]) -> Optional[str]:
+    """
+    Main function to predict face shape for a given image.
+    """
+    # 1. Load Model
+    try:
+        model, label_encoder = load_model_resources()
+    except Exception as e:
+        print(f"Failed to load model resources: {e}")
+        return None
+
+    # 2. Process Image (Extract Landmarks)
+    print(f"Processing image: {image_path}")
+    keypoints, img_bgr = create_face_mesh(image_path)
+
+    if keypoints is None:
+        print("Could not extract landmarks. Exiting.")
+        return None
+
+    # 3. Normalize Landmarks
+    h, w = img_bgr.shape[:2]
+    normalized_kpts = normalize_landmarks(keypoints, w, h)
+
+    # 4. Prepare Features (Flatten and drop Z)
+    # The model expects a flattened array of [x1, y1, x2, y2, ...]
+    flattened_features: List[float] = []
+    for kp in normalized_kpts:
+        flattened_features.extend([kp[0], kp[1]])  # x, y only
+
+    # Reshape for sklearn (1 sample, N features)
+    features_array = np.array([flattened_features])
+
+    # 5. Predict
+    print("Running prediction...")
+    # Get probabilities
+    probas = model.predict_proba(features_array)[0]
+    # Get prediction
+    prediction_idx = model.predict(features_array)[0]
+    predicted_label = label_encoder.inverse_transform([prediction_idx])[0]
+
+    # 6. Show Results
+    print("\n" + "="*30)
+    print(f"PREDICTED FACE SHAPE: {predicted_label.upper()}")
+    print("="*30)
+
+    print("\nConfidence Scores:")
+    # Sort probabilities
+    class_indices = np.argsort(probas)[::-1]
+    for i in class_indices:
+        class_name = label_encoder.classes_[i]
+        score = probas[i]
+        print(f" {class_name}: {score:.4f}")
+
+    return predicted_label
+
+

+def parse_args(argv: Optional[Sequence[str]] = None) -> argparse.Namespace:
+    """
+    Parse command-line arguments.
+
+    Note: Default behavior remains to run against `sample_image.jpg` when no args are provided.
+    """
+    parser = argparse.ArgumentParser(description="Predict face shape from an image using a trained sklearn model.")
+    parser.add_argument(
+        "image",
+        nargs="?",
+        default="sample_image.jpg",
+        help="Path to the input image (default: sample_image.jpg).",
+    )
+    return parser.parse_args(argv)
+
+
+if __name__ == "__main__":
+    args = parse_args()
+    predict_face_shape(args.image)

|
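Steps 4 and 6 of `predict_face_shape` (dropping z, flattening to `[x1, y1, x2, y2, ...]`, and listing class scores from highest to lowest) can be tried without the model files. The sketch below uses only the standard library; the helper names `flatten_xy` and `rank_scores`, the class names, and the scores are hypothetical and are not taken from the trained label encoder.

```python
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float, float]


def flatten_xy(landmarks: Sequence[Landmark]) -> List[float]:
    """Drop z and interleave coordinates as [x1, y1, x2, y2, ...]."""
    features: List[float] = []
    for x, y, _z in landmarks:
        features.extend([x, y])
    return features


def rank_scores(class_names: Sequence[str],
                probabilities: Sequence[float]) -> List[Tuple[str, float]]:
    """Pair each class with its probability, highest probability first."""
    return sorted(zip(class_names, probabilities),
                  key=lambda pair: pair[1], reverse=True)


# 478 landmarks (the count noted in demo.py for refine_landmarks=True)
# yield 2 * 478 = 956 features once z is dropped.
dummy_landmarks = [(0.0, 0.0, 0.0)] * 478
feature_count = len(flatten_xy(dummy_landmarks))
print(feature_count)

# Hypothetical class names and scores, for illustration only.
ranked = rank_scores(["oval", "round", "square"], [0.2, 0.7, 0.1])
for name, score in ranked:
    print(f"  {name}: {score:.4f}")
```

The feature count matters because a scikit-learn model rejects input whose width differs from what it was trained on, so the landmark count and the x, y ordering have to match the training-time preprocessing.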

example.webp

ADDED


face_shape_model.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:790585e7c236f108d1131d4d295f28f68b2cbe2702f81ba500c5b8a9ec3294f2
+size 1308871

label_encoder.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4ed219dcc5c0195ddd7f21aed8a86714ba9bada51c3684961a9331bf072af1d7
+size 283

|
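The two `.pkl` entries above are Git LFS pointer files rather than the pickles themselves: each records a spec version, a SHA-256 object id, and a byte size. A minimal sketch of how a downloaded file could be checked against such a pointer follows. The helper names `parse_lfs_pointer` and `matches_pointer` are hypothetical, and the parser assumes the simple three-line layout shown in this diff.

```python
import hashlib
from typing import Tuple

# Pointer text copied from the label_encoder.pkl entry in this diff.
POINTER_TEXT = """version https://git-lfs.github.com/spec/v1
oid sha256:4ed219dcc5c0195ddd7f21aed8a86714ba9bada51c3684961a9331bf072af1d7
size 283
"""


def parse_lfs_pointer(text: str) -> Tuple[str, int]:
    """Return (sha256 hex digest, size in bytes) from a pointer file's text."""
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algorithm, _, digest = fields["oid"].partition(":")
    if algorithm != "sha256":
        raise ValueError(f"unexpected hash algorithm: {algorithm}")
    return digest, int(fields["size"])


def matches_pointer(data: bytes, pointer_text: str) -> bool:
    """Check that data has the size and SHA-256 digest the pointer records."""
    digest, size = parse_lfs_pointer(pointer_text)
    return len(data) == size and hashlib.sha256(data).hexdigest() == digest


digest, size = parse_lfs_pointer(POINTER_TEXT)
print(digest, size)
```

If `demo.py` fails inside `pickle.load`, one thing worth checking is whether the local `.pkl` file is still the small text pointer instead of the real binary, which can happen when a repository is cloned without Git LFS installed.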

requirements.txt

ADDED

@@ -0,0 +1,7 @@
+gradio>=4.0.0
+mediapipe==0.10.22
+Pillow>=10.0.0
+opencv-python-headless>=4.8.0
+numpy>=1.24.0
+scikit-learn>=1.3.0
+

|
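Because `mediapipe` is pinned to an exact version while the other packages only set a minimum, it can help to see what is actually installed before running `demo.py`. The sketch below only reports versions and does not compare them against the constraints; the helper names `installed_version` and `report` are hypothetical.

```python
from importlib import metadata
from typing import Dict, Optional, Sequence

# Distribution names as listed in requirements.txt.
REQUIRED = [
    "gradio",
    "mediapipe",
    "Pillow",
    "opencv-python-headless",
    "numpy",
    "scikit-learn",
]


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(packages: Sequence[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version (or None)."""
    return {name: installed_version(name) for name in packages}


for name, version in report(REQUIRED).items():
    print(f"{name}: {version or 'not installed'}")
```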

sample_image.jpg

ADDED
